Balancing deep user research with tight deadlines is a challenge. Unmoderated usability testing offers a solution: a scalable, flexible way to gather feedback without spending hours coordinating schedules or moderating sessions.

But how do you make sure your results are meaningful and your tests are worth the effort? At Eleken, we’ve run countless user tests, so in this guide, we’ll cover the basics of unmoderated testing, walk through each step of the process, and explore the tools and insights you need to make your tests a success. Along the way, we’ll draw from the experiences of UX professionals to highlight what works and what doesn’t.

First, let’s start with the fundamentals: What exactly is unmoderated usability testing, and how does it compare to other methods?

What is an unmoderated usability test?

An unmoderated usability test is a type of usability testing where participants complete tasks or interact with a product on their own, without real-time guidance or supervision from a moderator.

This approach allows you to gather insights on how users interact with your design in their own environment and at their own pace. You can test a high-fidelity prototype or evaluate navigation on a live website and get a natural, unbiased look at user behavior.

How it works

At its core, unmoderated user testing involves three key components:

- A goal: What specific aspect of your design or product are you trying to evaluate?

- Clear instructions: Tasks or scenarios that guide users through what you want to test.

- A tool: Software that records user interactions, collects feedback, and provides analytics.

How it compares to moderated testing

The biggest difference between unmoderated and moderated usability testing is the level of control. Moderated tests involve a researcher actively observing and engaging with participants in real-time. This allows for follow-up questions, clarification, and probing deeper into user behaviors.

Unmoderated tests, on the other hand, rely on well-structured tasks and automated tools. They are best suited for situations where you need to test at scale or when scheduling live sessions isn’t practical.

When to use moderated vs unmoderated testing?

Unmoderated user testing shines in specific contexts:

- High-fidelity prototypes: When your design is almost ready and you need final validation.

- Simple workflows: Straightforward tasks where users are unlikely to need assistance.

- Content clarity: Evaluating whether messaging or navigation resonates with users.

- Understanding a problem well: If you have a good idea of what is wrong with your product, you can create a well-structured, unmoderated scenario that would give you the needed insights.

However, it may not be ideal for early-stage prototypes or complex workflows that require a moderator to probe deeper into user behavior. For example, if you know that your website or product has serious issues, choose moderated testing, which lets you explore them in much more detail.

You can also learn more about user research in our comprehensive guide to the UX research process.

Now, let’s dive into more details about when unmoderated UX studies are effective.

When to use an unmoderated usability study

While this method offers speed and scalability, it isn’t the right fit for every situation. Here’s a breakdown of when it shines and when you might want to choose an alternative approach.

Ideal scenarios for unmoderated usability testing

- Validating high-fidelity prototypes at late stages

Unmoderated tests work best when your design is polished and close to final. At this stage, you’re less concerned with broad UX issues and more focused on fine-tuning details like navigation, task flows, or layout clarity.

That was the case with our client Populate, a healthcare startup. Once we designed high-fidelity Figma mockups, the Populate team tested them with real users — doctors. The results were invaluable, revealing key insights such as how dropdown lists could save time and improve the user experience for their audience.

- Quick feedback at scale

If your goal is to gather insights from a larger group of participants in a short amount of time, unmoderated testing is your choice. Without the need to schedule live sessions, you can collect data rapidly and efficiently.

As Reddit user designtom stated:

The main benefit is that you can set up a scenario on Monday afternoon and have a set of recordings by Tuesday morning. They’re nowhere near as rich or insightful as moderated, but they can be fast.

- Testing straightforward workflows

Simpler tasks, like exploring a single-page app or checking if content is easy to understand, are perfect for unmoderated testing. Because participants are working on their own, it’s best to avoid anything too complicated or unclear – those can lead to confusing or unreliable results.

As Reddit user Necessary-Lack-4600 put it in one of the discussions:

Unmoderated is more of a fit if your design has had all the low hanging issues weeded out, and you don't need to know the "why", but there are important aspects that still need optimisation, like % tasks complete, limiting drop off points, time to complete or conversion rate where you often AB test different designs.

- Content and messaging validation

Unmoderated testing is particularly effective for content and messaging, especially in marketing. Since there’s no moderator present, users interact with the content the same way they would in the real world, without external guidance or bias. For example, when training newer researchers, one UX professional noted that moderators sometimes unintentionally influence results by answering participant questions or guiding their behavior. By removing that variable, unmoderated tests give a more accurate picture of how users respond to messaging and design elements.

When to avoid unmoderated usability testing

While an unmoderated user test can be incredibly useful, there are certain scenarios where it’s not the best fit. Here’s when you might want to consider other UX research methods:

- Early-stage prototypes

Unmoderated testing is not ideal for low-fidelity or early-stage designs that lack context or visual clarity. Participants often struggle to understand rough concepts without additional explanation, leading to frustration or irrelevant feedback.

Tip: Use moderated testing at this stage, as it allows you to guide users and gather deeper insights into their initial reactions. It may be more beneficial when you’re working with a rough design. You can clarify tasks and better understand the reasons behind user struggles.

- Complex workflows

Tasks with multiple branching paths, or workflows that need a lot of explanation before users understand them, are challenging to test without a moderator. In these situations, users may misinterpret instructions or skip steps, which can lead to incomplete or confusing results.

For example, Reddit user purpleprin6 shared that unmoderated tests work best for simpler designs with clear paths, such as high-fidelity prototypes. They added:

I mostly used unmoderated for high-fidelity prototypes where there’s basically only one journey to click through and the user couldn’t get too off course.

So, if your workflow involves multiple decision points or nuanced interactions, opt for moderated testing. A moderator can clarify steps and ask follow-up questions to uncover deeper insights.

- Emotionally nuanced tasks

Unmoderated tests often fall short when exploring tasks that rely on emotional engagement or subjective responses. Without a moderator to observe body language, tone, or other emotional cues, it’s easy to miss critical insights.

For example, imagine you need to test the emotional resonance of a new ad campaign or an onboarding experience. To understand people’s reactions, you may need to observe the process in real time and be able to talk with testers.

Tip: If emotional depth or empathy is central to your research goals, go for moderated testing.

- When follow-up questions are essential

If you anticipate needing to ask “why” or dig deeper into user decisions, unmoderated testing isn’t the right fit. Without a live conversation, it’s harder to get context behind user actions or misunderstandings. If you’re trying to uncover motivations or understand specific behaviors, moderated testing is the better option.

Now that you know when unmoderated usability testing is the right choice, let’s look at how to run one effectively.

Step-by-step guide to running unmoderated usability tests

Imagine this: you’ve just launched a new feature, and your team is eager to know if users can figure it out. You send out a test to real users, and within hours, recordings start rolling in. Some users complete the task seamlessly, while others hit a roadblock at the same spot. With every click and hesitation recorded, you can pinpoint exactly where your design needs work — all without sitting in on a single session.

An unmoderated study can feel like a behind-the-scenes look into how users experience your product, but only if you set it up correctly. Let’s break down the process into seven clear steps.

Step 1: Define your goals

Every test begins with a clear purpose. What are you trying to learn? There are many useful usability metrics, such as task completion rates, navigation ease, or messaging clarity. Whichever you focus on, your goal will shape everything from participant selection to data analysis.

Though this first step may sound obvious and generic, it’s super important specifically for unmoderated tests, where you won’t have the ability to lead a live conversation. A clear goal ensures that the test stays focused and participants provide insights aligned with your objectives.

Examples of goals for unmoderated research:

- Task success: Determine if users can complete a key action, like signing up for a newsletter or finding a product in a catalog.

- Navigation clarity: Test whether users can easily locate specific pages, such as “Pricing” or “Contact Us.”

- Content comprehension: Assess whether users understand the message on a landing page or in-app tutorial.

- Feature usability: Validate whether a new feature, like a search filter or dropdown menu, works as intended.

- Time-on-task: Measure how long it takes users to complete specific actions, such as making a purchase or booking an appointment.

Step 2: Choose the right tool

Unmoderated usability testing tools are essential: they take the place of a moderator, guiding participants through tasks, recording their actions, and collecting their feedback. Choosing the right tool isn’t just about convenience; it’s about ensuring your test runs smoothly and gives you reliable insights.

Here are a few things to consider when picking a tool:

- Ease of use: Is the tool intuitive for both your participants and your team? If participants struggle with the platform itself, it could skew your results.

- Participant recruitment: Does the tool offer access to a participant pool, or will you need to find your own? This can save a lot of time, especially if you’re testing at scale.

- Data collection and analysis: Think about what kind of data you need — are you looking for video recordings, click-path tracking, or survey results? Choose a tool that aligns with your goals.

- Scalability: If you’re planning to test with a large group, make sure the tool can handle the volume without glitches.

- Cost: Some tools are budget-friendly, while others come with advanced features and higher price tags. Decide what fits your budget without compromising on essentials.

While we won’t go into specific tools here, we’ll explore them in detail later on (after we go through all steps). The dedicated tools section will break down some of the top options, highlighting their features and use cases to help you make an informed decision.

The last tip about choosing the right UX research tool is about keeping things simple: if you’re new to unmoderated testing, go for a tool with a straightforward interface. Overly complex options can add unnecessary friction, especially when you’re still learning the ropes.

Step 3: Write explicit instructions

Clear and detailed instructions are the foundation of unmoderated UX studies. Since there’s no moderator to guide participants or clarify tasks, your instructions must stand on their own. Unclear instructions may frustrate users and lead to incomplete tasks or irrelevant data.

Here are some tips to prepare clear instructions:

1. Be painfully clear

Participants interpret tasks literally, so vague prompts can easily confuse them. Write instructions as if you’re explaining to someone seeing your product for the first time.

Practical tips:

- Use step-by-step instructions: Break tasks into smaller, specific actions.

Instead of: “Explore the website.”

Try: “Click on the ‘About Us’ page. Scroll down to find the contact information and describe what you see.”

- Avoid ambiguous language: Replace terms like “explore,” “check,” or “look” with precise actions like “click,” “scroll,” or “describe.”

Reddit user tiredandshort shared how participants often misunderstand vague phrases, leading to unintended actions:

You have to be excrutiatingly careful with your words. Like I could write “Imagine xyz scenario where you have to google something” and then they will go and actually google it. I can’t just be like “imagine xyz scenario” because they will think THAT in itself is the instruction. To be fair, it IS pretty interesting to just see what their first instinct is on what to google but still. But it does make it really difficult because then they jump around in the tasks and get frustrated or confused because they think they have already completed it. Hopefully this time my test goes better because after that little “imagine” intro, I put “We will provide you with the sites. Please click continue

2. Set expectations upfront

Participants need to know the rules before they begin. Establish clear guidelines to keep them focused and avoid unnecessary errors.

What to include in your instructions:

- State whether they can click on anything or must just observe.

Example: “WITHOUT CLICKING, tell us where you’d expect to find the checkout button.”

- Explain what they should do after completing a task.

Example: “After you’ve finished exploring the page, click ‘Next’ to move to the next task.”

You can try capitalizing certain words for clarity, such as “STOP after completing the task” or “CLICK the ‘Submit’ button” to ensure testers understand what they are supposed to do.

3. Keep tasks focused and manageable

Avoid overloading participants with too many steps or overly complex workflows. A series of shorter, focused tasks is easier for participants to follow and results in more reliable data.

Practical tips:

- Limit each task to 2-3 steps.

- Use separate tasks for different goals to prevent confusion.

As noted earlier, unmoderated testing works best for straightforward workflows where you don’t need to ask “why.”

4. Test for different contexts

Participants might use various devices or browsers during the test. Ensure your instructions apply across platforms and are not biased toward one setup.

If you’re testing a mobile app, include instructions like: “Use your smartphone for this task, and describe any issues with navigation or text readability.”

5. Consider adding examples

Where appropriate, include examples to clarify what you’re asking for. For instance:

Example for feedback: “If a page looks cluttered, write: ‘The images and text overlap, making it hard to read.’”

For a great pool of user testing questions, check out our article.
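The wording tips in this step lend themselves to a quick automated check before you pilot. The sketch below is a minimal, hypothetical linter, not a feature of any testing tool: the `lint_task` function, the `AMBIGUOUS_VERBS` list, and the "one sentence equals one step" heuristic are all assumptions made for illustration, built from the vague terms and the 2-3 step limit mentioned above.

```python
import re

# Assumed word list, taken from the vague terms named in this step.
AMBIGUOUS_VERBS = {"explore", "check", "look"}
# Assumed limit, based on the "2-3 steps per task" tip.
MAX_STEPS = 3


def lint_task(instruction: str) -> list[str]:
    """Return warnings for one task instruction (empty list means no warnings)."""
    warnings = []
    # Compare whole lowercase words, so "checkout" does not match "check".
    words = set(re.findall(r"[a-z']+", instruction.lower()))
    for verb in sorted(AMBIGUOUS_VERBS & words):
        warnings.append(f"ambiguous verb: '{verb}'")
    # Rough heuristic: treat every sentence as one step.
    steps = [s for s in re.split(r"[.!?]+", instruction) if s.strip()]
    if len(steps) > MAX_STEPS:
        warnings.append(f"too many steps: {len(steps)} (limit {MAX_STEPS})")
    return warnings


vague = "Explore the website."
precise = (
    "Click on the 'About Us' page. Scroll down to find the contact "
    "information and describe what you see."
)
print(lint_task(vague))
print(lint_task(precise))
```

A check like this only catches exact word matches (it would miss "looking", for example), so treat it as a first pass before the pilot in Step 4 rather than a replacement for it.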

Step 4: Pilot your test

Even the most carefully written instructions can be misinterpreted. Before launching your test, run a pilot with 1-2 participants to identify potential confusion or gaps. It can save hours of frustration later.

Tips for a successful pilot:

- Use participants unfamiliar with the project to simulate a real test environment.

- Observe where they hesitate or deviate from the expected path, and revise accordingly.

- Keep instructions simple and test-specific. Avoid overloading participants with too much detail.

Step 5: Recruit participants

The quality of your insights depends on the relevance of the people testing your product. There are basically two main ways to find beta testers:

- Tap into your network: Reach out to people you know, such as friends, colleagues, or coworkers who fit your target audience. While this is often the easiest option, be cautious of bias, as they may not provide the most objective feedback.

- Use recruitment platforms: Many usability testing tools offer access to a pool of testers. These platforms make it easy to find participants that match your demographics, from age and location to industry experience. We’ll dive into these tools later in the article.

Tips for recruiting the right participants:

- Define your target audience clearly: Think about who your product is for and what characteristics your ideal tester should have. For example:

Are they existing customers or new users?

Do they need prior experience with similar tools or features?

- Screen participants carefully: Use a screener survey to ensure participants align with your goals. Ask relevant questions, such as:

“Have you used [a similar app/tool] before?”

“How often do you perform [a specific task your product helps with]?”

Avoid leading questions to prevent bias.

- Aim for diversity: Include participants with different backgrounds, experiences, and skill levels. A diverse pool helps uncover usability issues you might miss with a homogenous group.

- Offer incentives: To encourage participation, consider offering rewards like gift cards, discounts, or free product access. Keep the incentive proportional to the time commitment.
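The screening tips above can be sketched as a simple qualification filter. This is a minimal illustration under stated assumptions: the `ScreenerAnswer` fields, the `qualifies` function, and the thresholds are hypothetical and stand in for whatever criteria your own study defines. Real recruitment platforms have their own screener features.

```python
from dataclasses import dataclass


@dataclass
class ScreenerAnswer:
    """One respondent's answers to a hypothetical screener survey."""
    name: str
    used_similar_tool: bool   # "Have you used [a similar app/tool] before?"
    tasks_per_month: int      # "How often do you perform [a specific task]?"


def qualifies(
    answer: ScreenerAnswer,
    *,
    need_experience: bool = True,
    min_tasks_per_month: int = 1,
) -> bool:
    """Return True if the respondent matches the (assumed) study criteria."""
    if need_experience and not answer.used_similar_tool:
        return False
    return answer.tasks_per_month >= min_tasks_per_month


respondents = [
    ScreenerAnswer("A", used_similar_tool=True, tasks_per_month=4),
    ScreenerAnswer("B", used_similar_tool=False, tasks_per_month=10),
    ScreenerAnswer("C", used_similar_tool=True, tasks_per_month=0),
]
selected = [r.name for r in respondents if qualifies(r)]
print(selected)
```

Writing the criteria down this explicitly, even if you never run the code, makes it easier to spot questions that do not actually feed into a qualification decision.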

In general, the recruiting process is similar to interviewing users when looking for product-market fit. And a final piece of advice from the Reddit community, shared by ConservativeBlack:

“If you choose to recruit from your own panel, I'd recommend still sending them a 5-7 question screener and having qualified users schedule an interview time.”

Step 6: Run the test

This is where participants independently complete the tasks you’ve prepared, giving you a glimpse into how they interact with your product without outside influence. Here’s how to ensure the process runs smoothly:

1. Monitor participation: Once the test is live, check the progress periodically to ensure participants are completing the tasks as expected. Tools often display metrics like completion rates and time-on-task, so you can identify any potential issues early. This is also where the pilot from Step 4 pays off: even minor ambiguities in instructions can cause participants to misinterpret tasks.

2. Encourage natural behavior: Make sure participants know they’re free to explore and interact with the product as they would in real life. Avoid instructions that feel restrictive or artificial. You may give the following instruction: “Pretend you’re searching for a new pair of running shoes. Start by looking through the navigation menu and explain how you’d find what you need.”

3. Test in realistic environments: Participants should ideally complete tasks in the same environment where they’d normally use your product. For example, if you’re testing a mobile app, encourage participants to use their smartphone rather than a desktop simulator.

4. Avoid overloading participants: Unmoderated tests can be tiring, especially if they involve long or overly complex tasks. Keep your test short and focused, 15-20 minutes maximum. Break larger goals into smaller, manageable tasks if needed.

5. Let the data roll in: Once the test is live, let participants work at their own pace. Avoid hovering over the data too soon. Give enough time for a meaningful sample to complete the test. If you notice a common problem early on, pause the test and refine your instructions before resuming. This ensures better quality data from the remaining participants.

Step 7: Analyze the results

Once the data starts rolling in, the real work begins. Analyzing results from unmoderated usability tests can feel overwhelming, especially if you’re dealing with hours of recordings or detailed metrics. The key is to stay organized and focus on patterns rather than getting lost in individual cases. Here’s how to streamline the process:

- Start with the big picture

Begin by looking at overarching metrics like task success rates, completion times, and error frequencies. These numbers give you a clear idea of where users are succeeding or struggling.

- Use tools to speed up analysis

Most unmoderated testing tools provide features like video playback, heatmaps, or automated insights to help you analyze data efficiently. Use these features to identify trends without manually reviewing every detail.

- Look for patterns

If one participant struggles with navigation, it might be a one-off. But if several participants get stuck at the same step, it’s likely a usability problem that needs fixing.

- Use tags and notes

Use tagging features in your tool to mark key moments during video reviews, like when a user hesitates, backtracks, or comments on confusion. You can also timestamp important moments and use them to focus your analysis. Some tools even let you aggregate notes for easy sharing.

- AI tools for summarization

Using AI tools to summarize transcripts can cut down review time significantly, though the quality varies depending on the platform.

- Combine quantitative and qualitative insights

Metrics tell you what happened, but participant comments and behavior explain why it happened. Combine these data points for a more comprehensive understanding of your results.

- Summarize actionable takeaways

The goal of analysis is to identify clear, actionable insights. Organize your findings into categories like navigation, design, or content, and prioritize issues based on their impact on user experience.

Sample takeaway format:

- Issue: Users struggled to find the checkout button.

- Evidence: 70% of participants failed to locate it, and many commented it wasn’t visible.

- Solution: Increase the button size and use a contrasting color.
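If your tool lets you export raw session data, the analysis steps above can be sketched in a few lines. The example below is a minimal illustration, not the output format of any particular tool: the `Session` fields, the `summarize` and `format_takeaway` helpers, and the sample numbers are all made up for demonstration. It computes the big-picture metrics, flags steps where more than one participant got stuck, and prints a finding in the issue, evidence, solution format.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class Session:
    """One participant's attempt at a single task (hypothetical export format)."""
    participant: str
    completed: bool
    seconds_on_task: float
    stuck_at_step: Optional[str] = None  # step where they gave up, if any


def summarize(sessions: list[Session], min_repeat: int = 2) -> dict:
    """Compute success rate, median time for completed tasks, and repeated blockers."""
    total = len(sessions)
    completed = [s for s in sessions if s.completed]
    stuck = Counter(s.stuck_at_step for s in sessions if s.stuck_at_step)
    return {
        "success_rate": len(completed) / total if total else 0.0,
        "median_seconds": median(s.seconds_on_task for s in completed) if completed else None,
        # A single failure may be a one-off; repeated ones suggest a usability problem.
        "repeated_blockers": {step: n for step, n in stuck.items() if n >= min_repeat},
    }


def format_takeaway(issue: str, evidence: str, solution: str) -> str:
    """Format one finding in the issue / evidence / solution structure."""
    return f"Issue: {issue}\nEvidence: {evidence}\nSolution: {solution}"


# Made-up sample data for illustration only.
sessions = [
    Session("p1", True, 40),
    Session("p2", True, 55),
    Session("p3", True, 70),
    Session("p4", False, 120, stuck_at_step="checkout"),
    Session("p5", False, 95, stuck_at_step="checkout"),
]
report = summarize(sessions)
print(report)
for step, count in report["repeated_blockers"].items():
    print(format_takeaway(
        issue=f"Users got stuck at the {step} step.",
        evidence=f"{count} of {len(sessions)} participants failed there.",
        solution="To be decided after reviewing the recordings.",
    ))
```

The numbers only tell you where to look. The recordings and comments for the flagged step are still what explain why participants got stuck.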

When approached thoughtfully, each of these steps contributes to a smoother process and more reliable insights. Of course, no method is without its challenges. In the next section, we’ll look at common pitfalls in unmoderated usability testing—and how to avoid them.

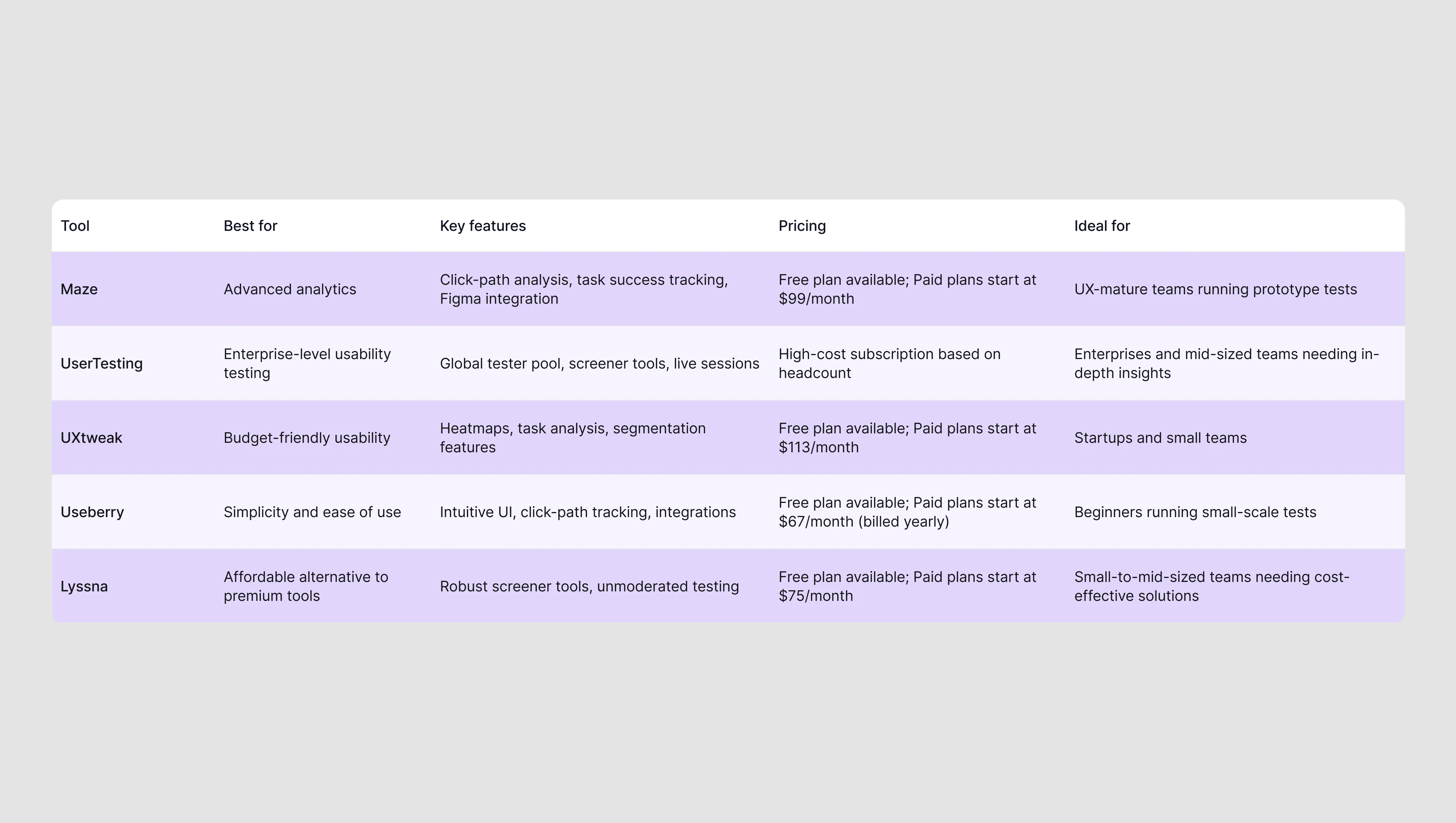

Tools for unmoderated usability testing

As I promised earlier, it’s time to explore the tools that make remote usability testing possible. I included the tools we love and use at Eleken and those highly recommended by the broader UX community, making them reliable choices for anyone looking to conduct effective tests.

I’ll walk you through each tool, explaining what makes it unique, what it’s best for, and any limitations to consider. Afterward, you’ll find a handy comparison table to help you decide which one fits your needs best.

1. Maze

Maze is a favorite at Eleken. It’s a go-to tool for UX-mature teams that want detailed, data-driven insights. It stands out for its robust analytics and seamless integrations with design tools like Figma, making it especially useful for prototype testing.

- What it does well: Maze specializes in click-path analysis, task success tracking, and user behavior analytics. These features help teams validate designs quickly and at scale. Maze also offers an option to recruit participants from the right audience.

- Why it’s loved: Teams running frequent tests appreciate Maze’s ability to automate repetitive tasks and generate easy-to-read reports.

- Who it’s best for: Reddit user Personal-Wing3320 noted:

I consider MAZE as a tool prefered by more UX mature companies that rub user testing and usability testing weekly.

So it suits companies that already have a strong UX process in place and need a tool to handle detailed testing scenarios. From our experience, it also suits companies that don’t have access to their real users but need to quickly test a hypothesis.

This video by Crema is a good demonstration of how to conduct an unmoderated test with Maze.

2. UserTesting

UserTesting is one of the most widely recognized tools for UX research (both moderated and unmoderated testing). It’s particularly popular among enterprises due to its powerful recruitment capabilities, advanced tools for remote user testing, and focus on delivering high-quality insights at scale.

- What it does well: UserTesting allows you to run live sessions to observe users in real-time or create unmoderated tests for faster feedback. Its screener tools help you recruit participants that match your ideal user persona. The platform also features video recording, tagging, and editing tools, making it easy to highlight key moments and share findings with your team.

- Why it’s loved: Enterprises appreciate UserTesting for its versatility, offering both live and asynchronous testing options and seamless integration with other tools. Teams find the ability to filter participants based on specific demographics or behaviors invaluable for gathering targeted insights.

- Who it’s best for: Enterprises and mid-sized teams with the budget to invest in a feature-rich testing tool. It’s ideal for those running frequent tests and needing in-depth participant screening and advanced analysis tools.

- What to consider: The platform’s biggest drawback is its cost. It’s primarily designed for enterprises, and while it’s incredibly powerful, the pricing may not suit startups or smaller teams with limited budgets.

3. UXtweak

UXtweak is a budget-friendly option among unmoderated usability testing tools that punches above its weight in terms of features. It’s particularly well-suited for startups and smaller teams looking to get detailed insights without spending too much.

- What it does well: UXtweak offers heatmaps, task analysis, and segmentation features. These tools allow you to analyze specific user groups, making it easy to understand different behaviors.

- Why it’s loved: It provides many of the advanced capabilities of higher-priced tools at a fraction of the cost. Users also like the level of control the tool gives: you define what you want users to do and track the process step by step.

- Who it’s best for: Small teams or startups that need powerful analytics but don’t have the budget for premium platforms.

4. Useberry

Useberry is known for its simplicity, making it perfect for teams new to unmoderated usability testing. Its intuitive design and beginner-friendly setup allow teams to get started quickly with minimal training.

- What it does well: Useberry specializes in prototype testing, offering visualizations like click-path tracking to help teams understand user behavior.

- Why it’s loved: Its clean, easy-to-use interface is ideal for smaller projects or teams with limited experience in usability testing.

- Who it’s best for: Beginners or small teams testing straightforward workflows. One Reddit user noted that it’s intuitive and user-friendly, perfect for smaller projects.

5. Lyssna

Lyssna is an affordable alternative to premium tools like UserTesting. It offers a budget-friendly solution for both unmoderated tests and participant recruitment. Designed for teams that need strong functionality without enterprise-level costs, it’s a great fit for startups and small-to-mid-sized teams.

- What it does well: Lyssna offers solid participant recruitment, with robust screener capabilities available across all plans. Whether you’re testing a prototype or collecting feedback on a live product, Lyssna simplifies the process and delivers actionable insights.

- Why it’s loved: Teams appreciate its affordability and versatility. Lyssna handles everything from task setup to data analysis, offering a seamless experience for conducting unmoderated usability tests. It’s particularly valuable for prototype testing, where you need quick, accurate feedback from the right users.

- Who it’s best for: Small-to-mid-sized teams, startups, or those looking for an affordable yet reliable tool for participant recruitment and usability testing. It’s an excellent option for teams with tight budgets who still want access to high-quality insights.

Now that we’ve explored the strengths and ideal use cases of each tool, here’s a quick comparison to help you decide which one fits your needs best.

If you’re interested in learning more about this topic (not only about tools for unmoderated studies), make sure to check out our article on the 15 best usability testing tools.

Beginner-friendly checklist for unmoderated usability testing

This checklist works like your UX research plan and distills everything we’ve covered in the article into an easy-to-follow guide, perfect for beginners. Follow these steps to stay on track from preparation to optimization, and at the end, you’ll find a link to download a printable version for quick reference.

Preparation

- Clearly define goals: Specify what you want to learn. Example: Are you testing task success rates, navigation clarity, or user engagement?

- Draft step-by-step instructions: Break down tasks into simple actions and write instructions for each.

- Choose the right tool: Pick a tool that aligns with your goals and budget and offers participant recruitment if needed.

- Pilot test: Test with 1–2 participants first to catch confusing instructions or technical glitches.

Execution

- Recruit participants: Use tools with built-in pools or reach out to friends, colleagues, or beta testers who match your target audience.

- Screen participants carefully: Ask 5–7 screener questions to ensure participants meet your criteria.

- Keep sessions short: Limit tests to 15–20 minutes and focus on 2–3 key tasks per session.

Analysis

- Review efficiently: Use playback tools to speed up video review (e.g., 1.5x speed) or rely on transcripts to identify key moments quickly.

- Tag notable moments: Highlight user hesitations, backtracking, or confusion during video reviews to pinpoint usability issues.

- Focus on key metrics: Look at success rates, error rates, and time-on-task as starting points for analysis.

Optimization

- Reflect and refine: Identify what worked and what didn’t. Did participants misunderstand a task? Adjust your instructions for future tests.

- Summarize actionable insights: Format findings as issues, evidence, and solutions.

- Iterate for future tests: Use feedback to improve your methodology and streamline future usability studies.

Putting unmoderated usability testing into action

Unmoderated usability testing is a powerful tool for gathering user insights at scale. It offers flexibility and efficiency that few other methods can match. But like any tool, its effectiveness lies in how you use it. From setting clear goals and writing explicit instructions to selecting the right tool and analyzing results thoughtfully, every step we’ve outlined plays a role in ensuring your tests deliver actionable insights.

Here’s the big takeaway: Start small, learn as you go, and refine your approach with each test. Begin with straightforward workflows or high-fidelity prototypes, and use the feedback to iterate. Testing doesn’t have to be perfect from the outset, but it should always be intentional. If you have the opportunity, combine it with moderated tests.

If you’re ready to get started, use the beginner-friendly checklist from this article. And if you’re looking for an expert team to seamlessly integrate usability testing into your UX research process, reach out to Eleken. We’ve helped countless clients create user-centric designs, and we’d love to help you do the same.

Remember: testing is not just about fixing problems — it’s about understanding your users and designing experiences that meet their needs.